Echo Netrunner: Creating a Fluid Skin Shader with SDFs and Skin Segmentation in Spark AR

- Dn Cherry

- Jul 1, 2022


From September 14 to October 13, Facebook hosted the Cyberware Challenge, a competition for augmented reality (AR) filters inspired by the video game Cyberpunk 2077 and built with their Spark AR software. Numerous competitions have been happening within the Spark AR ecosystem, all evidence of Facebook’s, excuse me, Meta’s push for extended reality experiences.

My Echo Netrunner filter was one of my most popular effects from the challenge and helped move me up in the ranking. My final rank was 201, which earned a $500 reward. Not too shabby for someone with fewer than 500 followers.

Below I dive into the inspiration and techniques for the design. Try out my Echo Netrunner filter yourself & let me know what you think.

Inspiration

How far would you mod? With the echo netrunner mod, you can deep dive into the cyber web without the risk.

During gameplay in Cyberpunk, the main character V must submerge their body in a below-freezing ice tub to deep dive and connect to the cyber web to meet the AI called Alt. Those with the ability to hack the network, such as V, are called netrunners. Between this deep dive scene and the phenomenal work by Taj Francis, I was inspired to put my own spin on an extreme body mod, so extreme that your face is augmented onto a metal mask. While playing the game, I always thought about how far I would modify my body if I lived in Night City, and this competition was the perfect opportunity to try it out.

The idea is for the echo netrunner mod to function as your own thermal suit, allowing for control as you enter the cyber web, with no need for ice tubs or fear of possible death. The thermal idea comes from nitrogen liquid cooling for computers. Users should feel as if their skin is liquid, with some computation running underneath. A durable clear casing conceals this liquid. Finally, blinking and raising the eyebrows trigger cyborg eyes to appear and enlarge.

Liquid Shader Effect

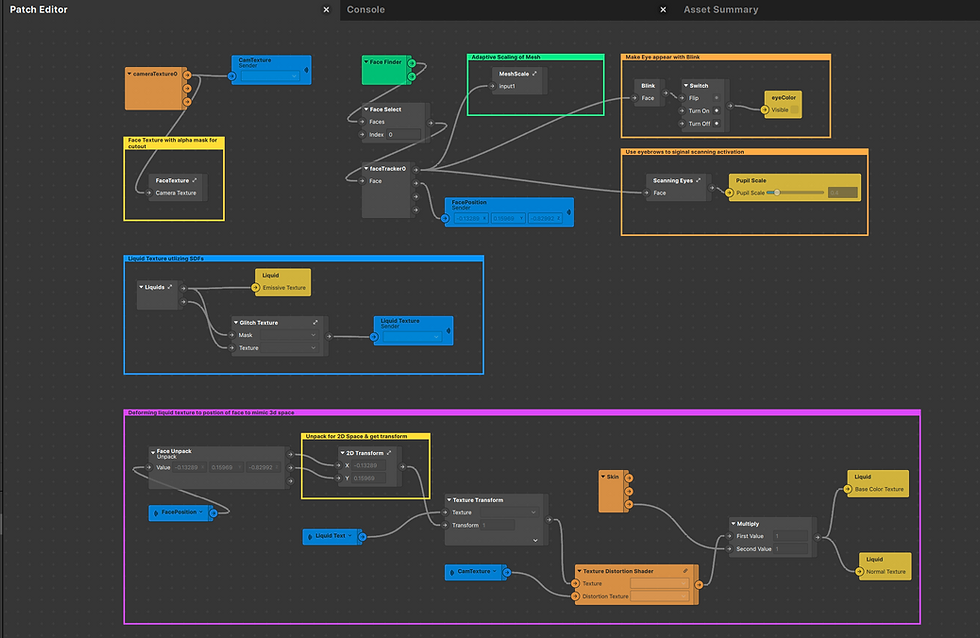

Signed distance fields (SDFs) were used to build the animated shader procedurally. In addition, the built-in skin segmentation feature let me isolate the effect to the user’s skin. Spark AR uses a 2D plane (scaled to the size of your device screen) for the skin alpha to follow along.

When approaching the design, I knew illustrating water movement and depth would be vital to minimizing the flat look of the 2D plane the material was projected onto. Accomplishing the look and feel of liquid required plenty of references. I turned to Joseph Gilland’s two books laying out the fundamentals of water design: The Classic Art of Hand-Drawn Special Effects Animation and The Technique of Special Effects Animation. Both are great books on the history, art, and science/anatomy of special effects (2D illustrated effects).

A moving body of water would be ideal, but importing animated textures would be computationally heavy, especially given Instagram’s 4MB limit, so I took the SDF path. I went for a ripple effect combining SDF patches such as circle, line, twirl, and annular.
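To make the ripple idea concrete, here is a minimal Python sketch of the underlying math. It is not the Spark AR patch graph used in the filter: the function names, the off-centre ring origin, and every parameter value are assumptions chosen for illustration, and the line SDF is left out for brevity. It only shows how a circle distance, a twirl of the sampling coordinates, and an annular operation can combine into animated rings.

```python
import math

def sdf_circle(x, y, cx, cy, radius):
    """Signed distance to a circle: negative inside, zero on the edge, positive outside."""
    return math.hypot(x - cx, y - cy) - radius

def sdf_annular(distance, thickness):
    """Turn any signed distance into a ring of half-width `thickness` around its edge."""
    return abs(distance) - thickness

def twirl(x, y, strength):
    """Rotate a point about the origin by an angle that grows with its distance from it."""
    angle = strength * math.hypot(x, y)
    c, s = math.cos(angle), math.sin(angle)
    return x * c - y * s, x * s + y * c

def ripple(x, y, time, spacing=0.25, thickness=0.02):
    """Distance to a set of rings expanding from an off-centre point in twirled space."""
    tx, ty = twirl(x, y, strength=1.5)              # hypothetical twirl amount
    d = sdf_circle(tx, ty, 0.3, 0.0, radius=time)   # radius grows as time advances
    d = (d + spacing / 2) % spacing - spacing / 2   # repeat one ring every `spacing` units
    return sdf_annular(d, thickness)

# Sample one row of the field; negative values fall inside a ring.
row = [ripple(x / 10 - 0.5, 0.0, time=0.4) for x in range(11)]
```

Advancing `time` each frame pushes the rings outward, which is the kind of motion an animated texture would otherwise have to store frame by frame.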

The video above looks into the Liquids group. For the computation feel, my initial thought was to place numbers above the liquid. However, when building the SDF, I noticed that breaks in the effect revealed the flat-lit section of the plane.

I went with the approach of placing the computation under the skin (functioning as a reveal of the computing machine). The computing visual was achieved by converting the custom SDF texture to a black and white mask.
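As a rough illustration of turning a distance field into a black and white mask, the Python sketch below thresholds a signed distance with a soft edge. The `softness` parameter and both function names are assumptions; the filter itself does this with Spark AR patches, and the exact thresholds used there are not stated in this post.

```python
def smoothstep(edge0, edge1, x):
    """Smooth 0-to-1 ramp between edge0 and edge1, clamped outside that range."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def sdf_to_mask(distance, softness=0.01):
    """White (1.0) inside the shape, black (0.0) outside, with a soft edge in between."""
    return 1.0 - smoothstep(-softness, softness, distance)
```

A larger `softness` gives a blurrier edge, while a value close to zero approaches a hard cutoff.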

Now, the SDFs and masking elevate the look, but the true key element for depth is combining the created texture with the skin segmentation and applying them as the normal for the shader. Finally, emission and environmental lighting are added to the shader.
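One common way to get a normal out of a grayscale texture is to treat it as a height field and take finite differences. The Python sketch below shows that idea and then fades the result toward a flat normal wherever the skin alpha is zero. This is an assumed approach for illustration only; the post does not spell out how the texture and segmentation were combined, and `height`, `skin_alpha`, `eps`, and `strength` are hypothetical names.

```python
import math

def height_to_normal(height, x, y, eps=1e-3, strength=1.0):
    """Approximate a unit normal from a height function using central differences."""
    dhdx = (height(x + eps, y) - height(x - eps, y)) / (2 * eps)
    dhdy = (height(x, y + eps) - height(x, y - eps)) / (2 * eps)
    nx, ny, nz = -strength * dhdx, -strength * dhdy, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return nx / length, ny / length, nz / length

def masked_normal(height, skin_alpha, x, y):
    """Blend from a flat normal (0, 0, 1) to the liquid normal by the skin alpha."""
    nx, ny, nz = height_to_normal(height, x, y)
    a = skin_alpha(x, y)
    bx, by, bz = a * nx, a * ny, (1.0 - a) + a * nz
    length = math.sqrt(bx * bx + by * by + bz * bz)
    return bx / length, by / length, bz / length
```

Because the lighting reacts to the perturbed normal rather than to a flat surface, ripples pick up highlights and shading, which is where the sense of depth comes from.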

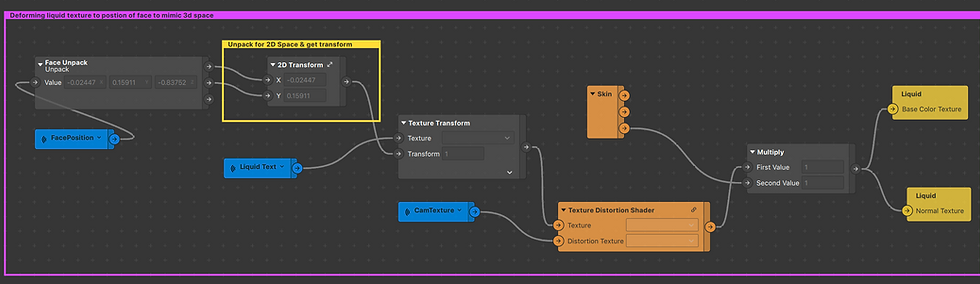

Since the texture was placed on a 2D plane, it would not follow the skin as the user moved. Instead, body movement revealed different parts of the texture. To solve this, I followed the process introduced during one of the Cyberpunk workshops hosted by Isabel Palumbo (workflow pictured below). With the new body tracker feature, there is potential to upgrade this method and achieve a more realistic result.

Download the entire file below to support my work and get a more detailed look at the process.

Metal Facemask

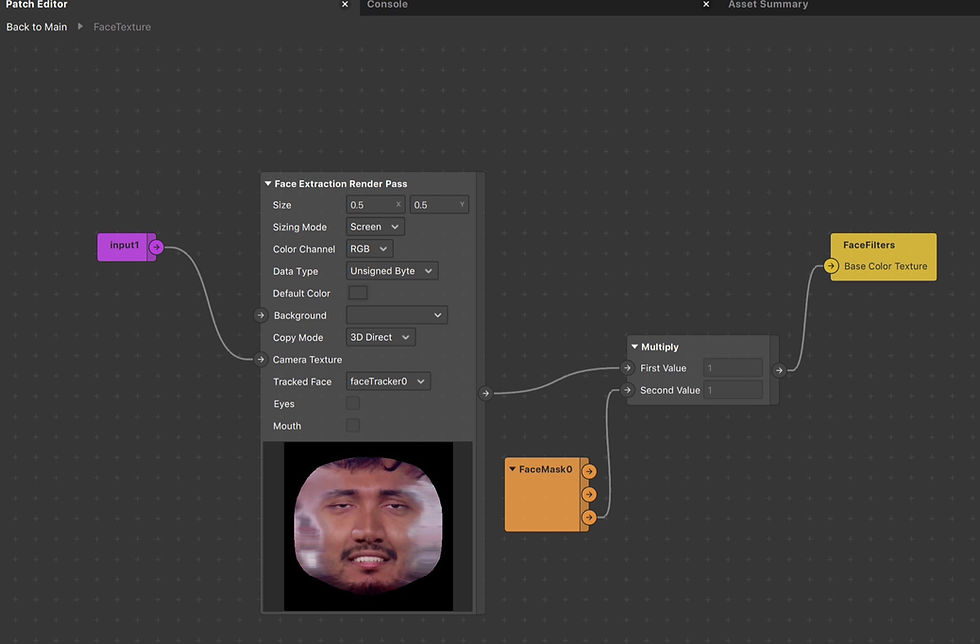

Building the face mask requires two essential parts:

Applying the camera texture of the tracked face

Using the custom mask as the alpha (FaceMask0 in the screenshot)
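Per pixel, the two parts above amount to a standard alpha blend: the camera’s view of the face supplies the color and the custom mask decides where it shows. The short Python sketch below illustrates that blend for a single pixel; the function and argument names are hypothetical, and in Spark AR this is set up on the material rather than written as code.

```python
def composite(face_rgb, mask_alpha, background_rgb):
    """Show the face color where the mask is opaque and the background elsewhere."""
    return tuple(
        mask_alpha * f + (1.0 - mask_alpha) * b
        for f, b in zip(face_rgb, background_rgb)
    )
```

For example, `composite((1.0, 0.8, 0.6), 1.0, (0.0, 0.0, 0.0))` keeps the face color, while an alpha of `0.0` leaves only the background.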

Enhancing the Look

For the face mask, adding shadows can improve the look of the objects that attach the mask to the body.

While I was happy with the aesthetics of the filter, having to keep your body parts away from one another so they don’t blend was not ideal. Chris Roibal, a phenomenal AR designer with a knack for shaders, graciously tried out my filter and gave feedback. He suggested using render passes instead of the default render pipeline to solve my issue of body parts blending when they intersect. I highly recommend checking out Chris’ filter portfolio and following him on Instagram (@meta_christ).

Cyberware Challenge Workshops

Active Spark AR Competitions

Check out the Echo Netrunner filter & let me know what you think.

Unfortunately, it will not work on all devices due to the use of skin segmentation, which is another aspect to consider when designing your effect. If you can’t open it, check whether there is an update for IG and make sure your device has plenty of storage.
